Time Series Analysis: Advanced Big Data Processing Methods

Master Time Series Analysis! Explore advanced big data processing methods for high-volume, real-time data. Learn scalable algorithms to transform your analytics.

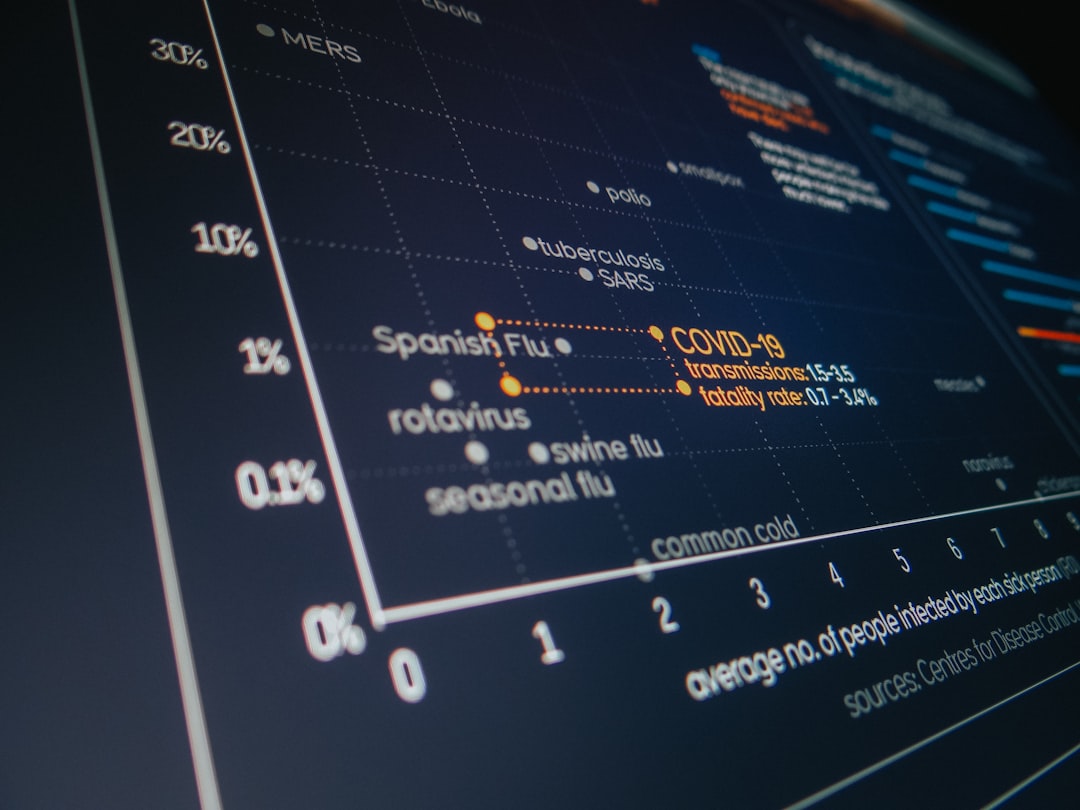

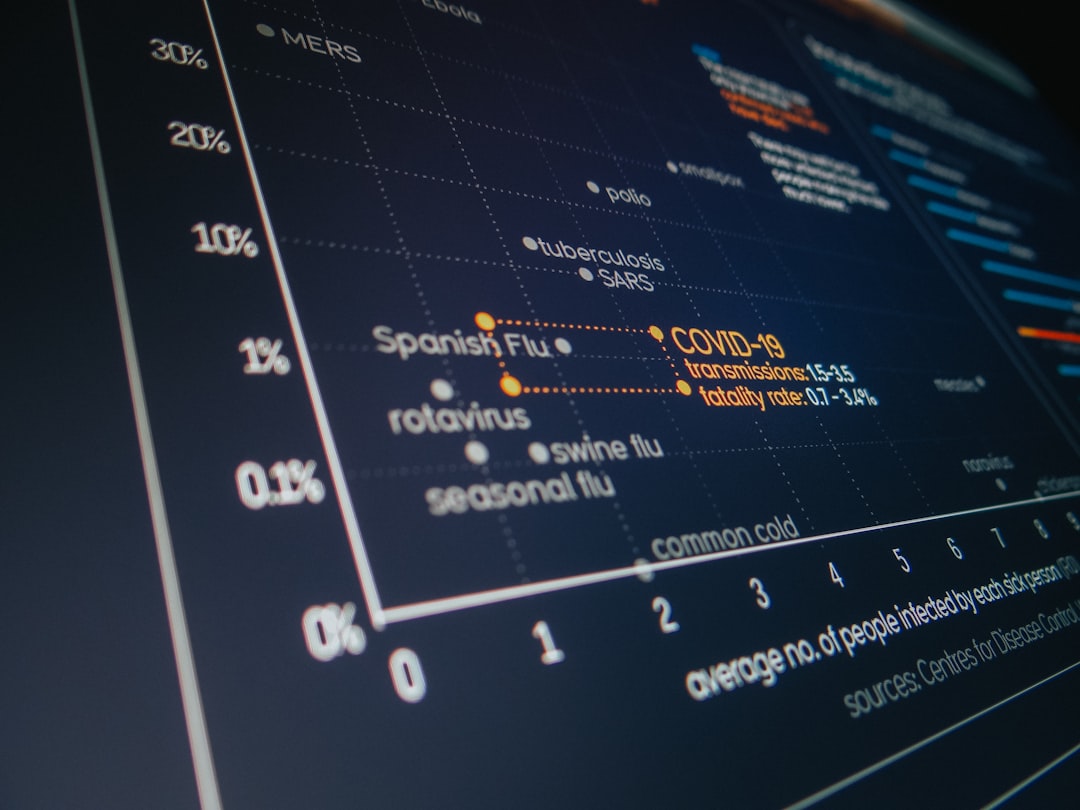

In an increasingly digitized world, data is generated at an unprecedented pace, with a significant portion arriving in the form of time series. From IoT sensors monitoring industrial machinery and smart city infrastructure to high-frequency financial transactions, patient health records, and global web traffic logs, time-stamped data is the heartbeat of modern operations. The ability to collect, process, and analyze this data effectively is no longer a luxury but a fundamental necessity for businesses seeking a competitive edge. Traditional time series analysis techniques, while powerful for smaller datasets, often buckle under the immense challenges posed by Big Data – characterized by its sheer volume, velocity, and variety.

The transition from megabytes to petabytes, from batch processing to real-time insights, demands a paradigm shift in how we approach time series analysis. Organizations today require advanced methodologies that can not only handle the scale but also extract meaningful, actionable intelligence with minimal latency. This involves leveraging distributed computing frameworks, specialized databases, cutting-edge machine learning algorithms, and robust cloud-native architectures. The goal is to move beyond simple historical reporting to predictive analytics, anomaly detection, and decision support systems that operate at the speed of business.

This comprehensive article delves into the sophisticated world of Time Series Analysis in the context of Big Data. We will explore the inherent challenges, dissect advanced processing methods, and illuminate the tools and techniques that empower data scientists and engineers to unlock the full potential of high-volume, high-velocity time series data. From scalable algorithms to real-time analytics and the pivotal role of cloud computing, we aim to provide a holistic view of the state-of-the-art in this critical domain, equipping readers with the knowledge to build robust, future-proof solutions.

The digital transformation has reshaped nearly every industry, turning once static data points into continuous streams of time-stamped events. This proliferation of time series data brings with it both immense opportunities and significant processing hurdles. Understanding these challenges is the first step towards building effective, scalable solutions.

The classic "3Vs" of Big Data are acutely relevant to time series. Volume refers to the sheer quantity of data generated. Consider smart grids collecting millions of electricity meter readings every second, or autonomous vehicles generating terabytes of sensor data per hour. This massive scale quickly overwhelms traditional single-machine processing capabilities. Velocity addresses the speed at which data is generated and must be processed. Real-time fraud detection in financial transactions or immediate alerts from critical infrastructure sensors demand processing within milliseconds, not minutes or hours. Finally, Variety highlights the diverse formats and sources of time series data. It can range from structured numerical readings to semi-structured log files, unstructured text streams, or even image sequences, each requiring different ingestion and processing strategies.

Conventional time series models like ARIMA (AutoRegressive Integrated Moving Average), exponential smoothing, or even Prophet, while effective for stationary or well-behaved series, often fall short in the Big Data paradigm. Their primary limitations include:

These limitations necessitate a fundamental shift towards methodologies that embrace parallelism and distributed architectures.

In today's competitive landscape, organizations cannot afford to ignore the insights hidden within their time series data. Scalable solutions are imperative for several reasons:

To overcome the limitations of traditional methods, distributed computing frameworks have become indispensable for processing high-volume time series data. These frameworks parallelize tasks across clusters of machines, so that datasets too large for a single machine can still be handled efficiently.

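Apache Hadoop laid the groundwork for Big Data processing. Its core components include the Hadoop Distributed File System (HDFS) for fault-tolerant storage across many machines, MapReduce for batch-oriented parallel computation, and YARN for cluster resource management.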

While direct MapReduce usage for complex time series analysis is less common today, HDFS remains a vital component for data lakes storing historical time series data, providing a robust foundation for other processing engines.
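To make the MapReduce model concrete, here is a minimal single-process sketch of its three phases (map, shuffle, reduce) applied to hourly averaging of sensor readings. It uses only the Python standard library and does not call any Hadoop API; the record layout `(device_id, timestamp, value)` and the function names are assumptions chosen for illustration.

```python
from collections import defaultdict
from datetime import datetime


def map_reading(record):
    """Map step: emit ((device_id, hour), value) for one raw reading."""
    device_id, ts, value = record
    hour = datetime.fromisoformat(ts).replace(minute=0, second=0, microsecond=0)
    return (device_id, hour.isoformat()), value


def shuffle(pairs):
    """Shuffle step: group values by key, as a framework would between phases."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_mean(values):
    """Reduce step: collapse all values for one key into their mean."""
    return sum(values) / len(values)


def hourly_means(records):
    grouped = shuffle(map_reading(r) for r in records)
    return {key: reduce_mean(vals) for key, vals in grouped.items()}


# Hypothetical sample data
readings = [
    ("pump-1", "2024-01-01T00:05:00", 10.0),
    ("pump-1", "2024-01-01T00:35:00", 14.0),
    ("pump-1", "2024-01-01T01:10:00", 20.0),
    ("pump-2", "2024-01-01T00:20:00", 3.0),
]
result = hourly_means(readings)
```

In a real cluster the map and reduce functions would run on different machines and the shuffle would move data over the network; because each key is reduced independently, the work parallelizes naturally.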

Apache Spark has emerged as the most popular and versatile distributed computing framework for Big Data analytics, including complex time series workloads. Its key advantages include:

Spark is invaluable for scalable time series algorithms. For instance, to calculate rolling averages or detect anomalies across millions of sensor readings:

```python
# Example: calculating a 24-hour rolling average on sensor data using PySpark
from pyspark.sql import SparkSession
from pyspark.sql.window import Window
from pyspark.sql.functions import col, avg

spark = SparkSession.builder.appName("Time_Series_Rolling_Average").getOrCreate()

# Assume 'sensor_data.csv' has columns: device_id, timestamp, value
df = spark.read.csv("s3://your-bucket/sensor_data.csv", header=True, inferSchema=True)
df = df.withColumn("timestamp", col("timestamp").cast("timestamp"))

# Define a window partitioned by device_id and ordered by time, covering the
# last 24 hours (86400 seconds). rangeBetween needs a numeric ordering column,
# so the timestamp is cast to epoch seconds in the ordering expression.
window_spec = (
    Window.partitionBy("device_id")
    .orderBy(col("timestamp").cast("long"))
    .rangeBetween(-86400, 0)
)

# Calculate the rolling average
df_with_rolling_avg = df.withColumn("rolling_avg_24hr", avg(col("value")).over(window_spec))

df_with_rolling_avg.write.parquet("s3://your-bucket/processed_sensor_data.parquet", mode="overwrite")
spark.stop()
```

This PySpark example demonstrates how to perform a common time series operation, calculating a rolling average, on a large dataset using Spark's window functions, which execute per partition across the distributed data. Note that the window is ordered by the timestamp cast to epoch seconds, because a range frame with numeric bounds cannot be applied directly to a timestamp column.

Storing and querying massive volumes of time series data efficiently is paramount. Traditional relational databases often struggle with the high ingest rates and specific query patterns inherent in time series workloads. This has led to the rise of specialized Time Series Databases (TSDBs) and cloud-native storage solutions.

Relational databases typically face several challenges with time series data:

Time Series Databases (TSDBs) are purpose-built to address these issues. They are optimized for:

Cloud providers offer managed services that simplify the deployment and scaling of TSDBs and related storage solutions:

| Feature | InfluxDB | Prometheus | TimescaleDB | Amazon Timestream |
|---|---|---|---|---|
| Type | Open Source, Standalone TSDB | Open Source, Monitoring TSDB | Open Source, PostgreSQL Extension | Managed Cloud Service |
| Primary Use Case | IoT, Monitoring, Analytics | System Monitoring, Alerting | Relational DB with TS capabilities | IoT, DevOps, Industrial Telemetry |
| Query Language | InfluxQL, Flux | PromQL | SQL | SQL (ANSI 2003) |
| Data Model | Tags (metadata), Fields (values) | Metric name, Labels (key-value pairs) | Relational (tables with time index) | Measure name, Dimensions, Measure Value |
| Scalability | Clustering (Enterprise) | Federation, Sharding | Horizontal scaling (via chunks) | Serverless, auto-scaling |
| Key Strengths | High ingest/query speed, data retention | Powerful query language for monitoring, alerting | SQL familiarity, ACID transactions | Fully managed, serverless, cost-effective |

The ability to process and analyze time series data as it arrives, rather than in batch, is critical for many modern applications. Real-time Time Series Analytics enables immediate insights, proactive responses, and enhanced decision-making.

Achieving real-time analytics typically involves stream processing architectures:

The core of real-time time series analytics often revolves around detecting anomalies and making rapid forecasts:

Case Study: Real-time Fraud Detection in Financial Transactions

A major financial institution implemented a real-time fraud detection system for credit card transactions. Every transaction event (volume: millions per second) is ingested into Apache Kafka. Apache Flink processes these streams, maintaining a profile for each user and merchant, including historical spending patterns, locations, and transaction frequencies. Flink jobs continuously evaluate incoming transactions against these profiles using rules-based engines and real-time machine learning models (e.g., Isolation Forest, One-Class SVM). Transactions flagged as suspicious are immediately routed for human review or automated blocking, drastically reducing financial losses and improving customer security. This entire process, from transaction swipe to fraud alert, occurs within milliseconds, demonstrating the power of Real-time Time Series Analytics.
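The per-user profile idea in this case study can be sketched in a few lines. The example below is a simplified stand-in, not the institution's system and not Flink code: it keeps a running mean and variance per key using Welford's online algorithm and flags amounts that deviate strongly from that key's history. The z-score rule replaces the Isolation Forest and One-Class SVM models mentioned above, and the class names, threshold, and warm-up count are assumptions for illustration.

```python
import math
from collections import defaultdict


class RunningStats:
    """Welford's online mean/variance: constant memory per key."""

    def __init__(self):
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0  # sum of squared deviations from the running mean

    def update(self, x):
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    def std(self):
        return math.sqrt(self.m2 / (self.n - 1)) if self.n > 1 else 0.0


class StreamingAnomalyDetector:
    def __init__(self, threshold=3.0, min_events=5):
        self.threshold = threshold    # z-score above which an event is flagged
        self.min_events = min_events  # warm-up events before flagging starts
        self.profiles = defaultdict(RunningStats)

    def process(self, key, amount):
        """Return True if amount is an outlier against the key's history so far."""
        profile = self.profiles[key]
        is_anomaly = False
        if profile.n >= self.min_events:
            std = profile.std()
            if std > 0 and abs(amount - profile.mean) / std > self.threshold:
                is_anomaly = True
        if not is_anomaly:
            profile.update(amount)  # keep flagged events out of the baseline
        return is_anomaly


# Hypothetical stream of (card_id, amount) events
detector = StreamingAnomalyDetector()
amounts = [20, 22, 19, 21, 23, 20, 500, 22]
flags = [detector.process("card-1", a) for a in amounts]
```

Because each profile is updated in constant time and memory, state like this can be partitioned by key across the workers of a stream processor, which is what makes per-event latency in the millisecond range feasible.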

For operations teams and business users, real-time data is only valuable if it can be queried and visualized quickly. This involves:

Leveraging the power of machine learning and artificial intelligence is paramount for extracting deeper insights, making accurate predictions, and automating decision-making from high-volume time series data. The challenge lies in scaling these sophisticated models to Big Data dimensions.

Applying classical machine learning to Big Time Series requires distributed approaches:

Deep learning models excel at capturing intricate, non-linear patterns and long-range dependencies often present in complex time series data, especially when dealing with high-dimensionality or multi-variate series:

Example: Predicting Energy Consumption Patterns with Distributed LSTMs

A smart city initiative aims to predict hourly energy consumption for thousands of buildings to optimize resource allocation and reduce waste. The raw data consists of half-hourly meter readings, weather data, and building metadata, accumulating to terabytes. To process time series data at this volume, a distributed deep learning approach is used. Data is ingested and preprocessed using Apache Spark to generate features (lagged consumption, weather forecasts, day-of-week indicators). This prepared data is then fed into a distributed training pipeline using TensorFlow or PyTorch, orchestrated by Spark or Kubernetes. Multiple LSTM models, or a single large distributed LSTM, are trained in parallel, leveraging GPUs across the cluster. The trained models can then predict future energy loads for individual buildings or aggregated regions, enabling proactive energy management. This approach combines the scalability of Spark for data preparation with the predictive power of deep learning for complex time series patterns.
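The feature-generation step described above can be illustrated without a cluster. The following sketch builds lagged-consumption and calendar features for a single building in plain Python; it assumes the readings have already been aggregated to a fixed hourly cadence, and the function name, lag choices, and field names are hypothetical. In the pipeline described, the equivalent logic would run in Spark per building.

```python
from datetime import datetime, timedelta


def build_features(series, lags=(1, 2, 24)):
    """Build one feature row per timestamp that has all requested lags.

    series: list of (datetime, value) at a fixed hourly cadence, sorted by time.
    """
    max_lag = max(lags)
    rows = []
    for i in range(max_lag, len(series)):
        ts, value = series[i]
        row = {
            "timestamp": ts,
            "target": value,
            "hour": ts.hour,
            "day_of_week": ts.weekday(),  # Monday is 0
        }
        for lag in lags:
            row[f"lag_{lag}"] = series[i - lag][1]
        rows.append(row)
    return rows


# Hypothetical data: 48 hourly readings starting on Monday 2024-01-01
start = datetime(2024, 1, 1)
hourly = [(start + timedelta(hours=h), float(h)) for h in range(48)]
features = build_features(hourly)
```

The first 24 hours produce no rows because their 24-hour lag is unavailable; dropping such rows, rather than filling them with placeholders, avoids feeding fabricated history to the model.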

The complexity of building, deploying, and managing time series models at scale necessitates robust practices:

The cloud provides an unparalleled environment for processing and analyzing Big Time Series Data, offering elastic scalability, managed services, and reduced operational overhead. Adopting cloud-native strategies is key to operationalizing advanced time series analytics.

Serverless computing allows developers to build and run applications and services without managing servers. Cloud providers handle the underlying infrastructure, scaling, and maintenance. For time series processing, serverless functions are ideal for:

Cloud providers offer a rich ecosystem of fully managed services that significantly simplify the deployment and management of complex Big Data architectures for time series:

The benefits of using managed services include reduced operational overhead, higher reliability, built-in security, and the ability to focus engineering resources on building value-added analytics rather than infrastructure management.

Operationalizing advanced time series analytics requires robust practices beyond just processing and modeling:

The field of time series analysis is continuously evolving, driven by new technological advancements and an increasing awareness of the societal implications of data-driven insights. Looking ahead, several trends will shape how we approach Advanced Big Data Processing for time series.

As the number of IoT devices explodes, processing data closer to its source is becoming critical. Edge Computing involves performing computations directly on the devices or on local gateways, rather than sending all raw data to a centralized cloud. This offers several benefits for time series:

Federated Learning is a distributed machine learning approach that complements edge computing. Instead of centralizing raw data, models are trained collaboratively across multiple decentralized edge devices or servers. Each device trains a local model on its own data, and only the model updates (not the raw data) are sent to a central server for aggregation. This aggregated model is then sent back to the devices for further local training. This approach is particularly valuable for time series data in privacy-sensitive domains like healthcare or personal activity monitoring, allowing insights to be gained without compromising individual data privacy.
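The train-locally, aggregate-centrally loop described above can be sketched with a toy model. The example below applies federated averaging to a one-variable linear model `y = w*x + b`: each client runs a few gradient steps on its own data, and the server averages the resulting parameters weighted by client sample counts. The model, learning rate, round counts, and data are assumptions for illustration; production systems add secure aggregation, client sampling, and much larger models.

```python
def local_update(weights, data, lr=0.1, epochs=20):
    """One client's local training: gradient descent on mean squared error."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)


def federated_average(client_weights, client_sizes):
    """Server step: average parameters, weighted by each client's sample count."""
    total = sum(client_sizes)
    w = sum(cw[0] * n for cw, n in zip(client_weights, client_sizes)) / total
    b = sum(cw[1] * n for cw, n in zip(client_weights, client_sizes)) / total
    return (w, b)


def run_rounds(clients, rounds=50):
    global_weights = (0.0, 0.0)
    for _ in range(rounds):
        # Only parameters leave each client; the raw (x, y) pairs never do.
        updates = [local_update(global_weights, data) for data in clients]
        sizes = [len(data) for data in clients]
        global_weights = federated_average(updates, sizes)
    return global_weights


# Hypothetical private datasets, both drawn from y = 2x + 1
clients = [
    [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)],
    [(0.5, 2.0), (1.5, 4.0)],
]
w, b = run_rounds(clients)
```

Here both clients share the same underlying relationship, so the averaged model recovers it closely. When client data distributions differ, convergence is slower and less predictable, which is one of the practical difficulties of federated training.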

As machine learning and deep learning models become more complex and powerful, their "black-box" nature becomes a significant concern, especially in high-stakes applications. Explainable AI (XAI) aims to make these models more transparent and understandable. For time series models:

Techniques like SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations) are being adapted for time series data. For instance, SHAP values can highlight which specific time points or features (e.g., a sudden temperature drop, a specific stock market event) had the most significant impact on a forecasting model's output for a given prediction.
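To show what such attributions mean, the sketch below computes exact Shapley values for a single prediction by enumerating every coalition of features. This is the quantity that the SHAP library approximates; it is not the library's API, and exhaustive enumeration is only practical for a handful of features. The toy forecaster, the choice of lagged inputs, and the baseline values used for "absent" features are assumptions for illustration.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, instance, baseline):
    """Exact Shapley values for one prediction.

    Features absent from a coalition are replaced by their baseline value.
    """
    n = len(instance)
    features = range(n)

    def value(coalition):
        x = [instance[i] if i in coalition else baseline[i] for i in features]
        return predict(x)

    phi = [0.0] * n
    for i in features:
        others = [j for j in features if j != i]
        for size in range(n):
            # Weight of a coalition of this size in the Shapley formula
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def toy_forecast(x):
    """Hypothetical forecaster over three lagged inputs: [lag_1, lag_2, lag_24]."""
    return 0.6 * x[0] + 0.1 * x[1] + 0.3 * x[2]


instance = [30.0, 20.0, 10.0]  # the observation being explained
baseline = [20.0, 20.0, 20.0]  # reference values, e.g. historical averages
phi = shapley_values(toy_forecast, instance, baseline)
```

For this linear model each attribution reduces to the coefficient times the feature's deviation from the baseline, and the attributions sum to the difference between the prediction and the baseline prediction. That additivity is what lets an analyst read the values as "how much each lag moved this forecast".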

The vast amounts of time series data being collected raise critical ethical questions:

The most significant challenge is scaling. Traditional methods are designed for single-machine processing, but Big Data time series demand distributed computing. This shift requires expertise in distributed frameworks like Spark or Flink, understanding of scalable storage solutions like TSDBs, and adapting algorithms to run in parallel. It's not just about more data, but a fundamentally different architectural approach.

You should choose a specialized TSDB when you have high ingest rates (millions of data points per second), frequently perform time-range queries and aggregations, and require optimized storage and compression for time-stamped data. While general NoSQL databases can store time series, TSDBs are engineered for superior performance, cost-efficiency, and functionality specific to time series workloads, offering features like built-in downsampling and data retention policies.
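Downsampling and retention, the two built-in features named above, are simple to state in code. The sketch below shows the underlying logic in plain Python on `(epoch_seconds, value)` pairs; it does not use any particular database's API, and the function names and sample values are hypothetical. A TSDB performs the same operations continuously and close to storage.

```python
from collections import defaultdict


def downsample(points, bucket_seconds):
    """Average (epoch_seconds, value) points into fixed-width time buckets."""
    buckets = defaultdict(list)
    for ts, value in points:
        bucket_start = ts - (ts % bucket_seconds)
        buckets[bucket_start].append(value)
    return sorted((start, sum(v) / len(v)) for start, v in buckets.items())


def apply_retention(points, now, max_age_seconds):
    """Drop points older than the retention horizon."""
    cutoff = now - max_age_seconds
    return [(ts, value) for ts, value in points if ts >= cutoff]


# Hypothetical readings every 30 seconds
raw = [(0, 1.0), (30, 3.0), (60, 5.0), (90, 7.0), (120, 9.0)]
per_minute = downsample(raw, 60)
recent = apply_retention(per_minute, now=180, max_age_seconds=120)
```

A common pattern is to keep raw data for a short horizon and downsampled data for much longer, trading resolution for storage cost as data ages.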

Real-time analysis is not always necessary, but it is increasingly important. It is crucial for applications requiring immediate action, such as fraud detection, critical infrastructure monitoring, or dynamic pricing. Batch processing remains relevant for comprehensive historical analysis, training complex machine learning models, generating periodic reports, and cases where latency is not a primary concern. Often, a hybrid approach (Lambda or Kappa architecture) that combines both is the most robust solution.
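In a Lambda-style hybrid, a serving layer answers queries by combining a complete but delayed batch view with an incremental real-time view. The sketch below shows one plausible merge rule, assuming both views map an hour's start time (epoch seconds) to an event count and that the batch layer is authoritative for everything before a known watermark. The function name and data are hypothetical.

```python
def merge_views(batch_view, speed_view, batch_watermark):
    """Serving-layer query: batch results before the watermark, real-time after.

    Both views map hour_start (epoch seconds) to an event count.
    """
    merged = {h: c for h, c in batch_view.items() if h < batch_watermark}
    for hour, count in speed_view.items():
        if hour >= batch_watermark:
            merged[hour] = count
    return dict(sorted(merged.items()))


# Hypothetical views: the batch job has fully processed data before t=7200
batch_view = {0: 100, 3600: 120}
speed_view = {3600: 118, 7200: 40, 10800: 7}
merged = merge_views(batch_view, speed_view, batch_watermark=7200)
```

For the hour starting at 3600 the batch count wins over the approximate streaming count, while the two most recent hours are served from the speed layer. A Kappa architecture removes this merge by treating the stream as the only source and reprocessing it when logic changes.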

Ensuring data quality involves multiple steps: implementing robust validation rules at the data ingestion point (e.g., checking data types, ranges, missing values); using stream processing engines to perform real-time data cleansing and transformation; applying anomaly detection techniques to identify and flag erroneous sensor readings; and having monitoring systems in place to track data lineage and integrity throughout the pipeline. Proactive data governance is key.
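The ingestion-time checks listed above (types, ranges, missing values) can be sketched as a small validation function. The field names and the accepted value range below are assumptions for a hypothetical temperature sensor; real pipelines would load such rules from configuration and route rejected records to a quarantine store for inspection.

```python
import math


def validate_reading(reading, min_value=-50.0, max_value=150.0):
    """Return a list of rule violations; an empty list means the reading passed."""
    errors = []
    for field in ("device_id", "timestamp", "value"):
        if reading.get(field) is None:
            errors.append(f"missing {field}")
    value = reading.get("value")
    if value is not None:
        # bool is a subclass of int in Python, so exclude it explicitly
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            errors.append("value is not numeric")
        elif math.isnan(value):
            errors.append("value is NaN")
        elif not (min_value <= value <= max_value):
            errors.append("value out of range")
    return errors


def split_valid(readings):
    """Separate clean readings from rejected ones, keeping the reasons."""
    valid, rejected = [], []
    for r in readings:
        errors = validate_reading(r)
        if errors:
            rejected.append((r, errors))
        else:
            valid.append(r)
    return valid, rejected


# Hypothetical batch of incoming readings
incoming = [
    {"device_id": "s1", "timestamp": "2024-01-01T00:00:00", "value": 21.5},
    {"device_id": "s1", "timestamp": "2024-01-01T00:01:00", "value": 999.0},
    {"device_id": "s2", "timestamp": None, "value": 20.0},
    {"device_id": "s3", "timestamp": "2024-01-01T00:02:00", "value": "n/a"},
]
valid, rejected = split_valid(incoming)
```

Keeping the violation reasons alongside each rejected record makes it possible to track which rules fire most often, which in turn points at faulty devices or upstream format changes.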

Beyond traditional data science skills (statistics, machine learning, programming), a data scientist in this domain needs strong proficiency in distributed computing frameworks (e.g., Spark, Flink), experience with specialized time series databases, cloud computing platforms (AWS, Azure, GCP), stream processing concepts, and MLOps principles. Understanding of time series specific deep learning architectures (LSTMs, Transformers) is also highly valuable.

Cloud computing profoundly impacts big time series analysis by providing elastic scalability, managed services, and a pay-as-you-go model. It eliminates the need for heavy upfront infrastructure investments, allows dynamic scaling of resources to meet demand, and simplifies operations through managed services for data ingestion, storage, processing, and machine learning. This enables smaller teams to build and operate sophisticated, high-volume time series analytics platforms efficiently.

The journey through Time Series Analysis in the era of Big Data reveals a landscape transformed by the sheer volume, velocity, and variety of information. We've moved far beyond traditional single-machine methods, embracing a sophisticated ecosystem of distributed computing frameworks, specialized databases, and advanced machine learning techniques. From the foundational principles of Apache Hadoop and the versatile power of Apache Spark to the low-latency capabilities of Apache Flink and the purpose-built efficiency of Time Series Databases like InfluxDB and TimescaleDB, the tools are now available to tackle even the most demanding time series challenges.

The shift towards real-time analytics, fueled by robust stream processing architectures and message queues like Kafka, empowers organizations to detect anomalies, forecast trends, and make critical decisions with unprecedented speed. Furthermore, the integration of advanced AI and deep learning models, capable of learning complex patterns across vast datasets, is unlocking deeper insights and driving automation. Cloud-native approaches, with their serverless functions and managed services, have democratized access to these powerful capabilities, reducing operational overhead and accelerating innovation.

As we look to 2024-2025 and beyond, the evolution continues with trends like edge computing, federated learning, and Explainable AI promising even more intelligent, efficient, and privacy-preserving time series solutions. However, with great power comes great responsibility. The imperative to address data privacy, mitigate algorithmic bias, and ensure responsible AI development will remain paramount. Organizations that successfully navigate this complex but rewarding domain will not only gain a significant competitive advantage but also contribute to building more resilient, efficient, and intelligent systems across every sector.

Embracing these Advanced Big Data Processing Methods for time series analysis is no longer an option but a strategic imperative. It is the key to unlocking the full potential of our data-rich world, transforming raw time-stamped events into actionable intelligence that drives progress and innovation.

Site Name: Hulul Academy for Student Services

Email: info@hululedu.com

Website: hululedu.com
